Machines that listen

Algorithms are learning to recognise emotions in the voice. The main challenge is to transition from the lab to the real world.

One day, it might be worth cursing the computerised voice of a call centre to try and jump the queue. | Image: Manu Friederich

You call your bank or insurance company, only to be greeted by an automated message. For several long minutes, you are read a drawn-out and often redundant list of options. Your blood boils until you can hold your tongue no longer: ‘Stupid system!’ Then, as if by magic, an operator picks up the line. This need not be a coincidence: programs using artificial intelligence can now recognise emotions, which allows companies to adapt their services.

At the University of Zurich, Sascha Frühholz, a professor of psychology specialising in neuroscience, is working on the automatic detection of human emotion. “Algorithms are becoming more effective, particularly at recognising the six principal emotions: anger, fear, joy, disgust, sadness and surprise. They still struggle when it comes to more complex emotions such as shame and pride. But that’s not unlike humans, of course”.

Mixing methods

The main challenge for the machines is learning to generalise. “The training data are extremely specific”, says Frühholz. “Performance drops with a change in acoustic environment or language. Even if an algorithm learns to recognise anger in the voice of someone from Zurich, it won’t be as successful with someone from Geneva. Accuracy drops even further with people from Asia, as the acoustic profiles of their languages are further removed still”.

To overcome this pitfall, Frühholz has experimented with combining supervised and unsupervised learning techniques. “We first trained the algorithm with voice data labelled as angry or happy. We then introduced unlabelled data, allowing the system to learn more independently”.
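The article does not describe Frühholz’s exact algorithm. One common way to combine labelled and unlabelled data is self-training, also called pseudo-labelling: a model is first fitted on the labelled examples, then it labels the unlabelled examples it is most confident about and is refitted on the enlarged set. The sketch below illustrates that generic idea only, assuming a simple nearest-centroid classifier and made-up two-dimensional feature vectors; the function names, the distance threshold `max_dist` and all the data are hypothetical.

```python
import math
from statistics import mean

# Hypothetical toy data: each point is an abstract 2-D feature vector standing in
# for acoustic features of a voice clip. Real systems use far richer features.

def centroids(points, labels):
    """Nearest-centroid model: one mean feature vector per emotion label."""
    groups = {}
    for p, lab in zip(points, labels):
        groups.setdefault(lab, []).append(p)
    return {lab: tuple(mean(dim) for dim in zip(*ps)) for lab, ps in groups.items()}

def predict(cents, p):
    """Return the closest emotion label and its distance (smaller = more confident)."""
    best = min(cents, key=lambda lab: math.dist(cents[lab], p))
    return best, math.dist(cents[best], p)

def self_train(labelled, labels, unlabelled, max_dist=1.5, rounds=5):
    """Self-training: fit on labelled data, then repeatedly pseudo-label the
    unlabelled points that lie close to a centroid and refit on the larger set."""
    X, y = list(labelled), list(labels)
    pool = list(unlabelled)
    for _ in range(rounds):
        cents = centroids(X, y)            # supervised step on current labels
        confident, remaining = [], []
        for p in pool:
            lab, d = predict(cents, p)
            (confident if d <= max_dist else remaining).append((p, lab))
        if not confident:                  # nothing close enough: stop early
            break
        for p, lab in confident:           # accept pseudo-labels as training data
            X.append(p)
            y.append(lab)
        pool = [p for p, _ in remaining]
    return centroids(X, y)

# Hypothetical example: a few labelled clips plus some unlabelled ones.
labelled = [(0.0, 0.0), (0.2, 0.1), (5.0, 5.0), (5.1, 4.9)]
labels = ["angry", "angry", "happy", "happy"]
unlabelled = [(0.5, 0.4), (1.2, 1.0), (4.5, 4.6), (3.8, 3.9)]

model = self_train(labelled, labels, unlabelled)
print(model)
print(predict(model, (1.0, 0.8))[0])
```

In this toy run, one unlabelled point is too far from the initial “happy” centroid to be accepted in the first round, but is absorbed in the second round once the centroid has shifted towards the new data. The threshold keeps uncertain points out of training; a real system would typically use a classifier’s probability estimates rather than raw distances.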

The recognition rate has reached 63 percent, markedly better than using supervised or unsupervised learning alone (54–58 percent). Humans, on the other hand, are 85–90 percent accurate in identifying the emotion in a person’s voice, adds Frühholz, who also studies human auditory perception. “For both humans and algorithms, recognition accuracy depends to a large extent on the number of emotions that must be distinguished at the same time”.
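The dependence on the number of emotions can be made concrete with simple arithmetic. A system that guesses at random among k equally likely emotions is right 1/k of the time, so the same accuracy is more impressive when k is larger. The article does not say how many emotions the 63 percent figure covers; the sketch below assumes balanced classes and merely shows how that figure would compare with chance for a few hypothetical values of k. The function names are illustrative.

```python
def chance_level(num_emotions: int) -> float:
    """Accuracy of uniform random guessing among equally likely emotions."""
    return 1.0 / num_emotions

def above_chance(accuracy: float, num_emotions: int) -> float:
    """Share of the gap between chance and perfect accuracy that is closed:
    0.0 means no better than guessing, 1.0 means perfect recognition."""
    c = chance_level(num_emotions)
    return (accuracy - c) / (1.0 - c)

# Hypothetical numbers of emotions; the 63 percent figure is from the article.
for k in (2, 4, 6):
    print(f"{k} emotions: chance {chance_level(k):.1%}, "
          f"63% accuracy closes {above_chance(0.63, k):.1%} of the gap")
```

With two emotions, 63 percent is only modestly above the 50 percent chance level; with six, chance falls to about 17 percent and the same score represents a much larger improvement.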

Keeping our roads safe

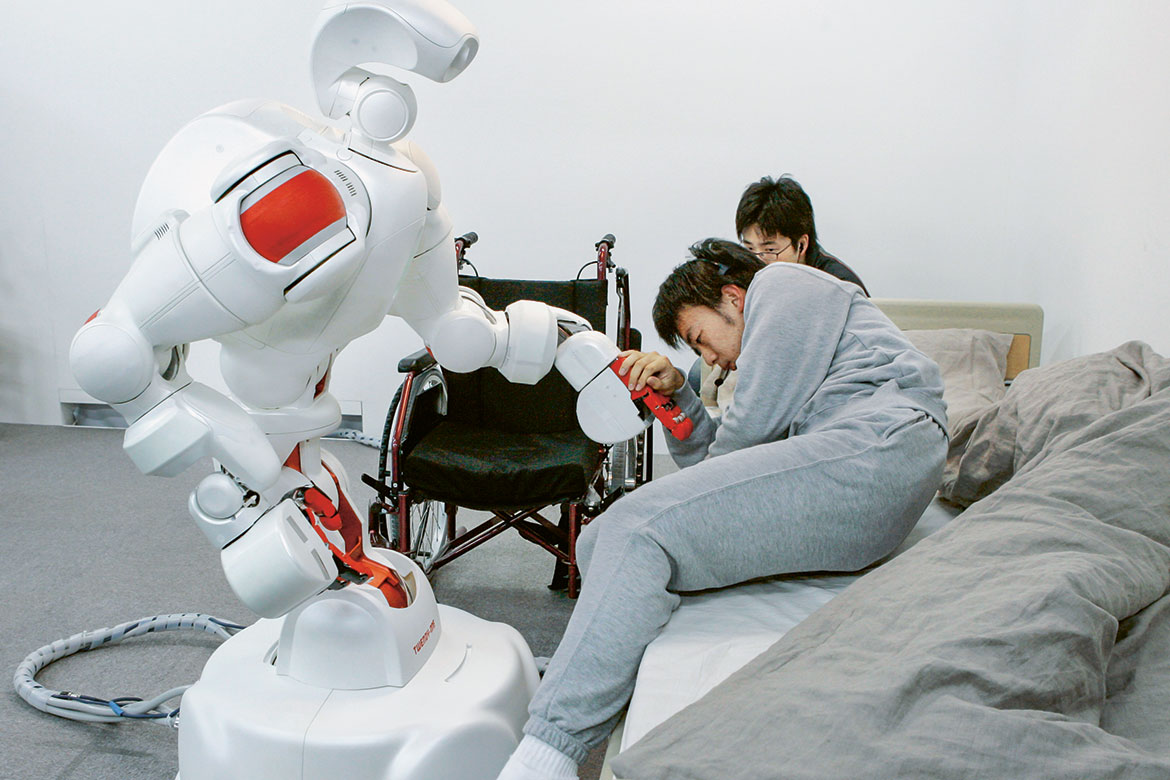

Automatic emotion recognition has a number of potential applications: customer service, marketing, surveillance, care for the elderly and even medicine. “For one thing, it will pick out the first signs of an episode of anxiety or depression”, says David Sander, director of NCCR Affective Sciences and of the University of Geneva’s inter-faculty research centre.

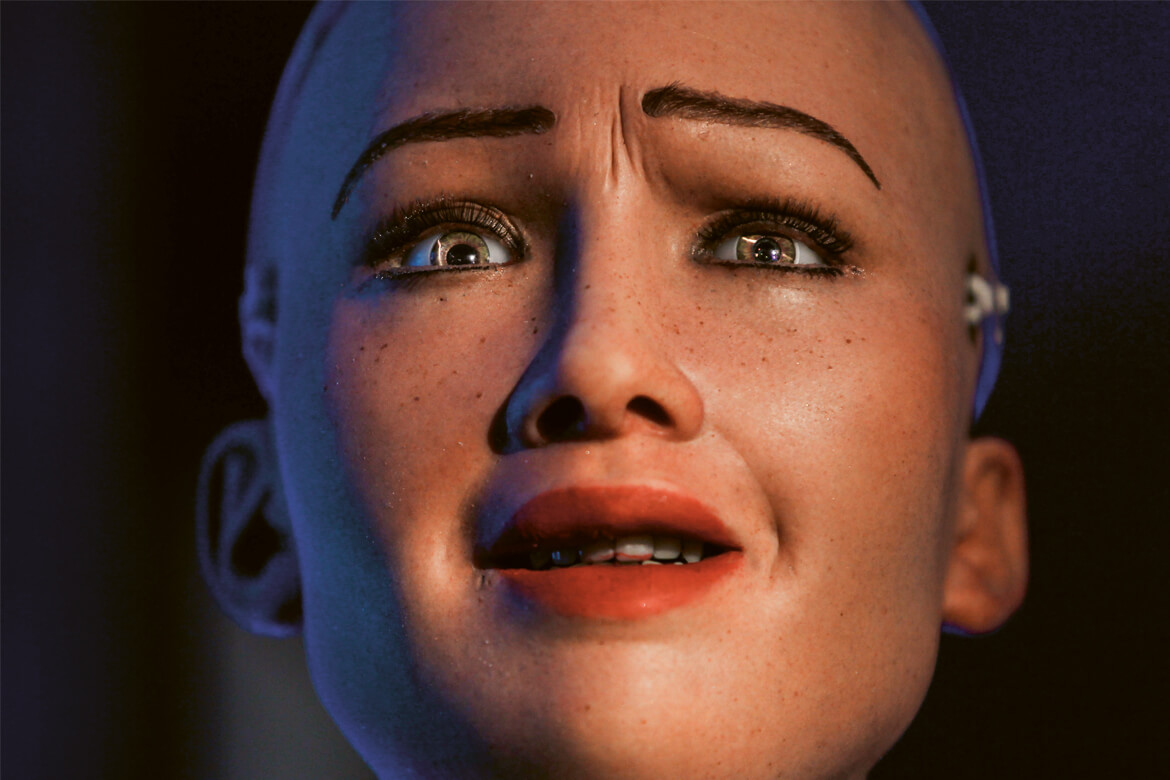

Over at EPFL, Jean-Philippe Thiran is conducting similar research, this time on the recognition of facial expressions. He has partnered with the automotive industry, which shares his interest in emotion recognition. Thiran says the aim is “to collate information about the driver. In semi-autonomous cars, for example, it’s extremely important to know the emotional state of the person behind the wheel when handing over control of the vehicle: are they stressed, and can they take decisions?” Irritated drivers might then find the car speakers playing relaxing music, while tired drivers might be stimulated by a slightly brighter dashboard.

“Today, research in this field has advanced to the stage where we’re tackling the recognition of facial expressions in unfavourable conditions such as poor lighting, movement and acute angles. Not to mention those quirky and subtle facial expressions”, adds Thiran. Algorithms are indeed advancing quickly, but they cannot yet interpret the full range of emotions that arise in everyday situations, whether revealed by the voice or by the face.

Sophie Gaitzsch is a journalist in Geneva.