Feature: Rationalising emotion

Trust is everything

Whether we like it or not, machines and artificial intelligence can awaken emotions in us, even a sense of trust. This can be advantageous, but it can also lead to misunderstandings and make us targets for manipulation.

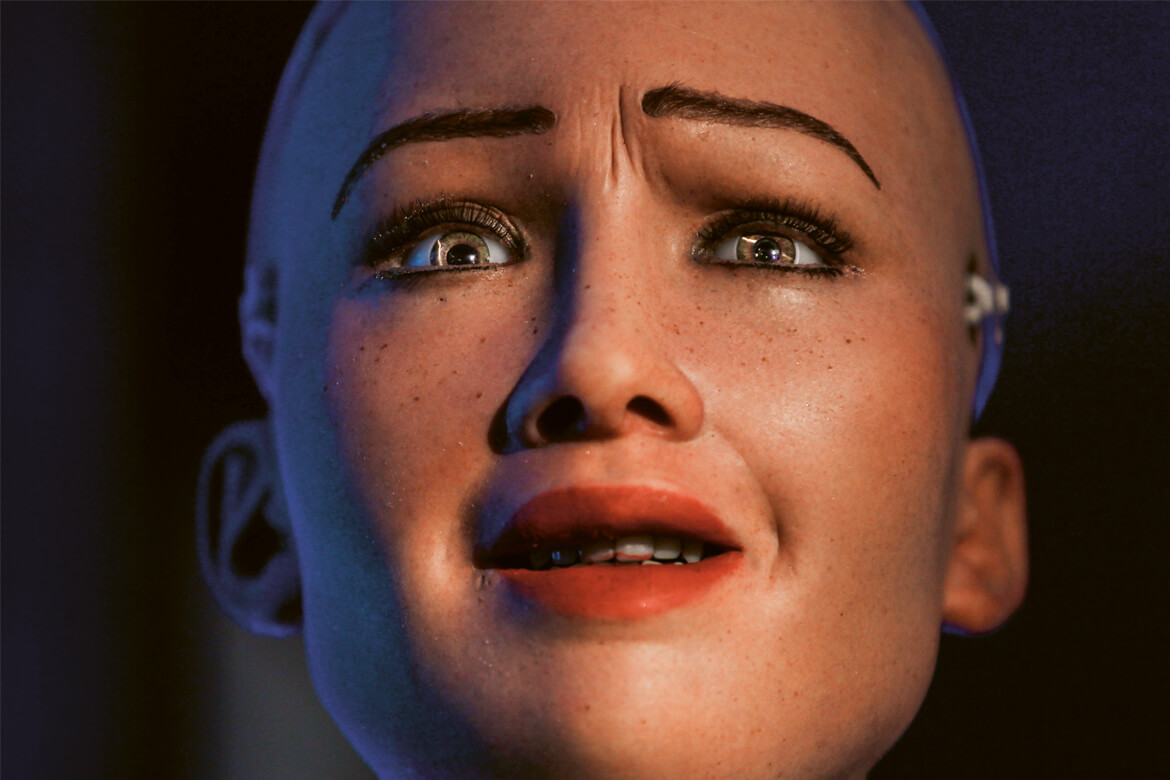

For many people, the robot Sophia is unsettling. She also illustrates the theory of the ‘uncanny valley’: the closer human-like robots come to being indistinguishable from humans, the harder we find them to accept, but that unease disappears once they truly are indistinguishable. | Keystone/AP Photo/Niranjan Shrestha

Do you swear at your computer when it doesn’t work the way it should? Do you laugh when the voice assistant on your smartphone gives you crazy answers? Do you even wish your robot lawnmower “good night” when he goes to sleep at his charging station? You’re not alone. “Human beings have a natural inclination to treat machines like other people”, says Martina Mara, a professor of robot psychology at the University of Linz. This is because the human brain is hardwired for social interaction and reacts instinctively to certain cues, such as movement. If our robot lawnmower happens to come towards us, we immediately assume he wants to make contact. “It takes very little for us to get the impression that machines have intentions and feelings”, says Mara.

Games with guilt

That’s not all. Machines also trigger emotions in us, even when we are fully aware that they aren’t living creatures. Elisa Mekler, a psychologist who runs the Human-Computer Interaction Research Group at the University of Basel, confirms this. One of her research interests is the relationships that her test subjects form with the characters in the computer games they play. “The feelings they describe are at times incredibly intense”, she says. They are the same feelings that we have towards people, ranging from sympathy and pride to fear, and even guilt when something bad happens to a character in the game.

Emotional events – both positive and negative – remain vividly in the memory. For example, if we have a bad experience in an online shop because we can’t find our way around, or if the robot assistant doesn’t understand what we want, then we get annoyed and frustrated and don’t want to use the product in question any more. “That’s why designers and manufacturers are very interested in creating products that generate positive feelings”, says Mekler.

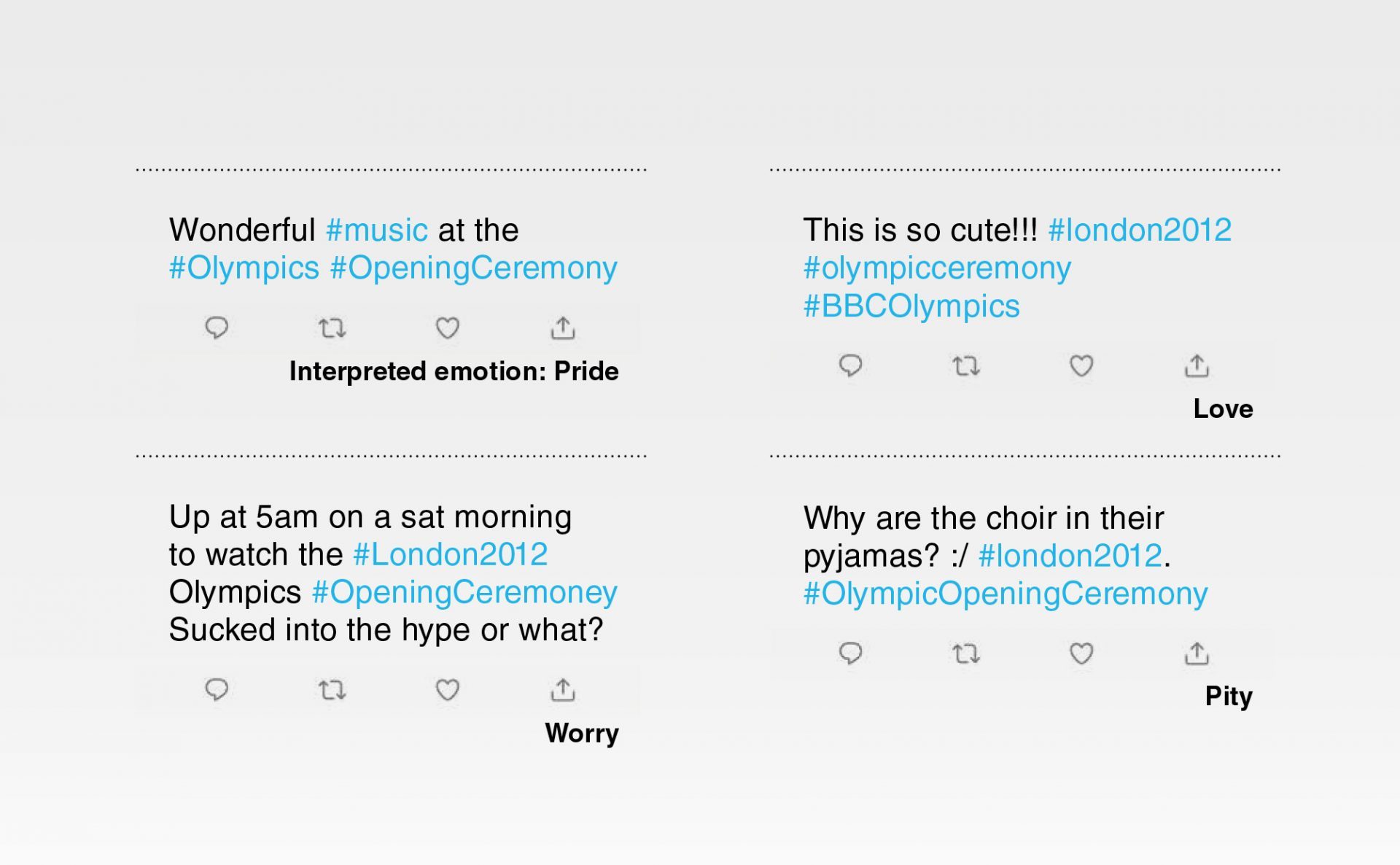

Most of these methods base their analyses on lists of hand-selected words that can signal certain feelings; others rely on machine learning. Many methods are fairly accurate when all they have to do is distinguish between positive and negative emotions. Discerning individual emotions such as joy, anger or sadness is harder, however, especially when they are only implied. It remains almost impossible for these methods to resolve ambiguous words, recognise irony or take context into account. To master such complex tasks, some researchers are turning to the particular learning abilities of deep neural networks, a highly developed form of machine learning that can solve complex, non-linear problems.
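To make the word-list approach concrete, here is a minimal Python sketch of lexicon-based polarity scoring. The two word lists are tiny, hypothetical examples rather than any research group’s lexicon, and the scoring rule (positive hits minus negative hits) is simply the most basic variant. The second usage line shows the limitation described above: an ironic sentence is scored as positive.

```python
# Hypothetical, hand-picked word lists; real lexicons contain thousands of entries.
POSITIVE = {"great", "happy", "love", "win", "proud", "brilliant"}
NEGATIVE = {"sad", "hate", "lose", "angry", "awful", "disappointed"}


def polarity(text: str) -> str:
    """Classify text as positive, negative or neutral by counting lexicon hits."""
    # Lower-case and strip surrounding punctuation so "Great," matches "great".
    tokens = [t.strip(".,!?;:\"'()") for t in text.lower().split()]
    score = sum(t in POSITIVE for t in tokens) - sum(t in NEGATIVE for t in tokens)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


print(polarity("So proud of our team, what a brilliant win!"))  # positive
print(polarity("Oh great, another delay."))  # positive (the irony is missed)
```

Counting words is cheap and transparent, which is why such lists are popular, but the sketch has no notion of context, so sarcasm and negation ("not happy") slip straight through.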

Learning from emoticons

For example, Pearl Pu’s team at EPFL analysed the feelings expressed in more than 50,000 tweets posted during the London 2012 Olympic Games. Their algorithm used the ‘distant learning’ method: it first analysed reliable clues in the tweets, such as emoticons, then generalised from them to interpret tweets containing only text (see examples below). It did so with some success.
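The general idea, often called distant supervision, can be sketched in Python: emoticons act as automatic but noisy labels, they are then stripped from the text, and a classifier trained on the remaining words is applied to tweets without emoticons. This is not the EPFL team’s algorithm. The emoticon mapping, the sample tweets and the choice of a simple Naive Bayes classifier are all hypothetical, chosen only to illustrate the principle.

```python
import math
import re
from collections import Counter

# Hypothetical mapping from emoticons to (noisy) emotion labels.
EMOTICON_LABELS = {":)": "positive", ":-)": "positive", ":D": "positive",
                   ":(": "negative", ":-(": "negative"}


def tokenize(text):
    """Lower-case the text and keep only runs of letters and apostrophes."""
    return re.findall(r"[a-z']+", text.lower())


def distant_labels(tweets):
    """Label tweets by their emoticons, then remove the emoticons from the text.

    Tweets with no emoticon, or with emoticons pointing both ways, are skipped.
    """
    labelled = []
    for tweet in tweets:
        labels = {label for emo, label in EMOTICON_LABELS.items() if emo in tweet}
        if len(labels) != 1:
            continue
        text = tweet
        for emo in EMOTICON_LABELS:
            text = text.replace(emo, " ")  # the classifier must learn from words alone
        labelled.append((text, labels.pop()))
    return labelled


def train_naive_bayes(examples):
    """Count label frequencies and per-label word frequencies."""
    class_counts = Counter()
    word_counts = {}
    vocab = set()
    for text, label in examples:
        words = tokenize(text)
        class_counts[label] += 1
        word_counts.setdefault(label, Counter()).update(words)
        vocab.update(words)
    return class_counts, word_counts, vocab


def classify(model, text):
    """Return the label with the highest log-probability (add-one smoothing)."""
    class_counts, word_counts, vocab = model
    total = sum(class_counts.values())
    best_label, best_score = None, -math.inf
    for label, n in class_counts.items():
        score = math.log(n / total)  # log prior
        denom = sum(word_counts[label].values()) + len(vocab)
        for word in tokenize(text):
            if word in vocab:  # words never seen in training are ignored
                score += math.log((word_counts[label][word] + 1) / denom)
        if score > best_score:
            best_label, best_score = label, score
    return best_label


tweets = [
    "Gold medal for the team, amazing :)",
    "What a fantastic race :D",
    "Amazing opening ceremony :-)",
    "Missed the final again, so sad :(",
    "Terrible result for the team :(",
    "Sad to see him injured :-(",
    "No emoticon in this one",  # skipped: no label available
]
model = train_naive_bayes(distant_labels(tweets))
print(classify(model, "An amazing, fantastic day"))  # positive
print(classify(model, "So sad, a terrible day"))     # negative
```

Removing the emoticons before training matters: otherwise the classifier would simply learn to look for the emoticon itself and could not generalise to text-only tweets.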

This is also one of the goals of so-called affective computing, which aims to teach artificial intelligence to understand human feelings and react appropriately to them, or even to simulate emotions itself. To decode emotions, algorithms draw on different sources of information, such as facial expressions, physiological parameters like skin temperature and skin conductivity, and the human voice. Others try to recognise emotions in texts (see the box above).

The possible applications are varied. Systems are already being developed that use cameras and sensors to detect when a driver is getting tired or angry, and that will insist on a break. There are also algorithms that come across as empathetic, such as ‘Karim’, an Arabic-speaking chatbot made by the company X2AI that is intended to help Syrian refugees with symptoms of post-traumatic stress disorder. And in the not-too-distant future, emotion-sensitive robot nurses could recognise whether a patient is frightened or agitated and adapt their behaviour accordingly.

Annoying misunderstandings

The automatic recognition of emotions may be taking off, but contextual information still often gets lost. For example, it’s almost impossible for a machine to tell whether someone is smiling out of happiness or out of embarrassment. “As a result, it’s easy for misunderstandings to occur between people and machines”, says Mireille Betrancourt, a professor of IT and learning processes at the University of Geneva. As part of the EATMINT project, Betrancourt is researching how emotions can be decoded during computer-assisted teamwork, or conveyed by users themselves to the machine they are working with.

In one of Betrancourt’s experiments, a test subject was frustrated because he could not solve a problem. The computer gave him an incongruous response: “How amusing!” That annoyed the test subject, who promptly broke off communication. “Inappropriate reactions lead to a loss of trust”, says Betrancourt. That’s why emotion-sensitive systems have to draw exactly the right conclusions. As long as this isn’t possible, she believes it’s better to ask users directly about their feelings rather than try to derive them indirectly from data.

Trust is everything

Trust is therefore essential if people are to get to grips with intelligent technologies. Sometimes people even place more trust in a machine than they would in another person. This can be valuable for therapeutic purposes, as an American study with war veterans has demonstrated. The veterans held conversations with a virtual therapist, an avatar called Ellie. When the test subjects were told that Ellie was controlled entirely by computers, they disclosed more memories they were ashamed of than when they believed a human being was steering it, simply because they weren’t afraid of being judged morally.

This example also shows the risks that too much trust can bring. “Systems that interact with us like people can very quickly elicit highly personal information from us”, says Mekler. This raises as yet unresolved questions about privacy and data security. The same applies to technologies designed to recognise feelings automatically. How secure is the data that voice-analysis software or an emotion-sensitive car has gathered about me? Who has access to it? A study carried out by the University of Siegen found that potential users expect their data to be stored securely and not passed on to third parties. Only under these conditions would they be prepared to use emotion-sensitive technologies.