Virtual reality

Virtual worlds replacing field work

Is the deepfake applicant likeable because she smiles? Will I find the exit more easily if the building is remodelled specifically for this purpose? Virtual models let scientists test very different versions of people or buildings in their studies. Virtuality is booming in research today, but it comes with a lot of caveats.

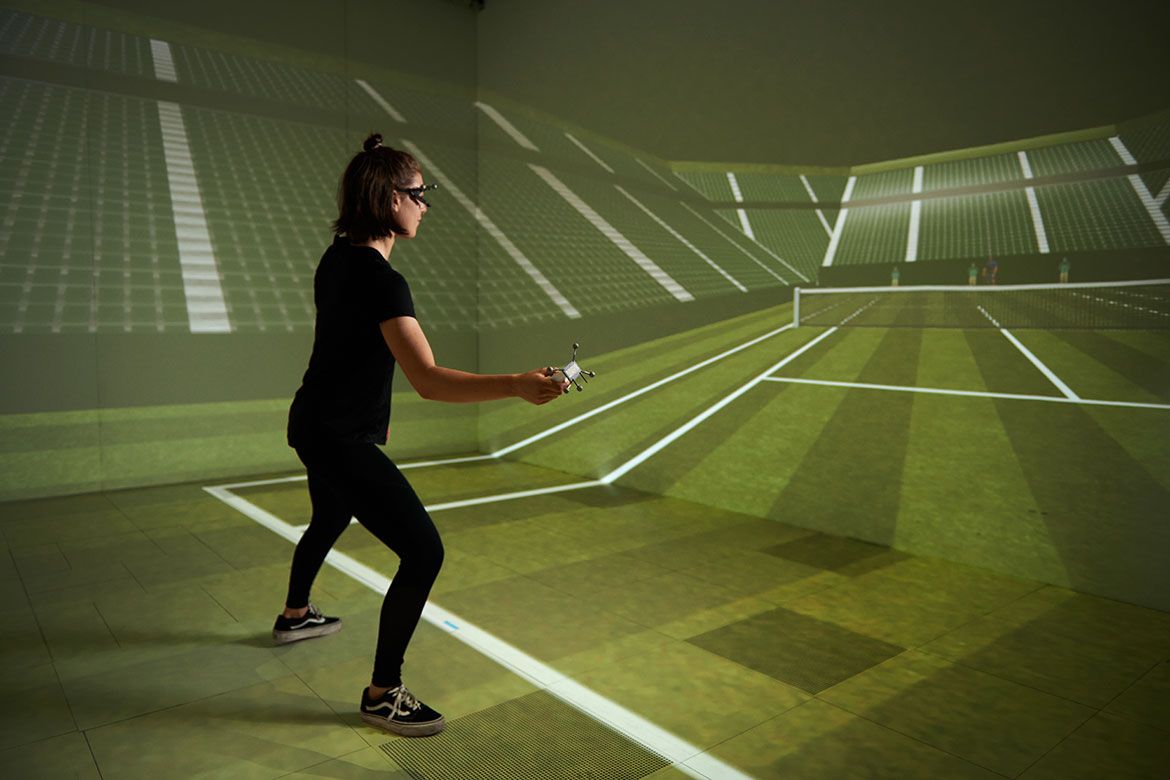

Wow, so many people! What exactly happens when a person goes on stage and has to give a lecture? Today, virtual worlds can be used to investigate this in psychological experiments, for example. | Photo: Vizard

The task is always the same. Some 150 participants have to find a room in the Sanaa Building, a structure in Essen, Germany, that has more than 130 different windows and four levels with varying ceiling heights. Designed as a concrete cube, it is considered an architectural masterpiece. “It’s a wonderful but difficult building”, says Christoph Hölscher, a professor of cognitive science at ETH Zurich. If you want to get from the bright auditorium on the ground floor to a meeting in a room on the third floor, for example, you soon lose all sense of orientation.

That is why Hölscher and his team have recreated the Sanaa Building’s concrete cube as a virtual model. One of the building’s fundamental problems is that once you’re inside, you have no proper overview of how it works as a whole. So the researchers replaced the closed staircase with a visually transparent one and inserted atriums into the ceilings in certain places so that people can look up into the other floors. “You can’t simply insert an atrium in a real building”, says Hölscher. Their tests showed that people navigate through the building faster when the lines of sight to important orientation points, such as the stairwells, are improved. “Architecture ought to take into account how people function cognitively”, says Michal Gath-Morad, who led the study.

Reduced to the max

Virtual models are increasingly being used for research in the cognitive sciences, social psychology, architecture and market research, says Hölscher. Test subjects wearing VR goggles interact with virtual people and environments, which lets researchers test human behaviour systematically. The scenarios are built using software from the gaming industry. According to Hölscher, the important thing is to transport people into another reality as accurately as possible. Experts call this the ‘objective degree of immersion’.

“Experimenting in virtual spaces has great advantages”, says Hölscher. “The experiments are usually simpler and thus cheaper to carry out. Each experiment has a clear framework, so it’s easily repeatable”. That matters a great deal in the sciences, where reproducibility has been a major topic of debate for years. Some experiments, like Hölscher’s, would be difficult or even impossible to carry out in reality. After all, you’re unlikely to find a building owner who’s willing to drill a hole in their ceiling just for test purposes.

By using virtual settings as models, the cognitive and social sciences are taking a methodological path similar to that of the natural sciences. Researchers in the life sciences, for example, often work with model organisms to understand fundamental processes. In the same spirit, Hölscher adopted mainly the spatial structure and the central visual axes of the building in Essen, while omitting the wall colours and the texture of the flooring. He thus reduced his experiment to its essential components. Depending on the question he wants to investigate, he can add further details.

To get useful results from virtual models, researchers have to work out which factors can be meaningfully depicted in a VR experiment. That also means being realistic about the limits of the method. “Virtual reality is always just a model. It’s like the models of the atom in physics, which help us understand reality without our having to reproduce it completely”, says Jascha Grübel of Wageningen University. He studies how computer technologies can be applied in a wide range of research areas and is currently working with Hölscher at ETH Zurich. Hölscher adds: “In the model, you can use controlled variants that are clearly different from each other. By comparing them, we can identify fundamental patterns of behaviour”.

Too many variables under real conditions

Now we switch to a laboratory in Lausanne. A young woman with dark eyes looks at you, blinks, nods briefly and smiles a little. Nothing more. The next time, she’s no longer smiling and no longer looking at you, but sideways past you. These are two scenes from a psychological experiment conducted by Marianne Schmid Mast, a behavioural scientist at the University of Lausanne, who wants to find out how nodding, smiling and direct eye contact can affect a job interview. Her test subjects assess a series of virtual people such as this dark-eyed woman, then answer questions on which behaviour they find more likeable and which of the people they’ve seen they would be more likely to hire.

In principle, this experimental set-up is the classical way of studying the rules of social interaction, whether at the workplace or in job interviews. But in Schmid Mast’s lab, participants are increasingly watching AI-generated videos instead of videos of real people acting out scenes. Software can take a photo of anyone and use it to create a ‘deepfake’ that performs a specific pattern of movements. The background is usually kept fairly neutral. “What’s important is that nothing distracts the test subjects from the actual experiment”, says Schmid Mast. “It’s fine if the scene looks quite simple. Sometimes that even helps”. Anything potentially irritating could jolt viewers out of the experiment. Even an avatar that is almost, but not quite, lifelike would be an irritant, an effect that researchers call the ‘uncanny valley’. This also applies to architecture, says Hölscher: “Sketches are often better than perfect virtual renderings for giving people a good impression of what you want”.

Once it’s been programmed, the behaviour to be studied can be tested with all manner of avatars: men or women, light-skinned or dark-skinned. If the same experiments were carried out with real people, the researchers would have to get different actors to practise the predefined facial expressions and gestures, and all of them would have to perform them in exactly the same way every time. That wouldn’t be easy, and above all it would be expensive. “But our deepfake videos can also combine different yet specific behavioural characteristics, enabling us to examine their impact in a controlled manner”, says Schmid Mast.

When you’re analysing non-verbal communication, you have to be able to translate nervousness or self-confidence into a combination of different gestures. “It’s only when you can standardise behaviour that you can draw causal conclusions”, says Schmid Mast. Observations in field tests, in other words in real-life situations, are often too complex. Too many other variables come into play, so behaviour may be interpreted in ways that actually rest on nothing more than chance. Controlled conditions, by contrast, allow you to measure clear outcomes.

All the same, Schmid Mast is aware of the limits of AI experiments in her field. It’s still difficult, for example, to create deepfakes of social interaction between two people. The smiles generated for her experiment are also still somewhat restrained, because smiles that show the teeth soon become creepy. So could the limitations of the technology be helping to determine which topics she addresses? Schmid Mast assures us that she wouldn’t let this happen; if necessary, her team could always switch back to using real people in their experiments. But given how rapidly the technology is developing, experiments with AI-generated videos are becoming increasingly important in her field.

Research with AI-generated videos and virtual reality is already booming. Virtual cityscapes are being created for a study investigating how order and disrepair in a city influence public health. And virtual models are being used to find out whether new wind turbines and solar installations are perceived more positively in built-up surroundings or out in nature. The spectrum of potential applications is vast.

Ethical boundaries also figure here

However, Grübel also points out that studies have repeatedly found important differences between how we perceive things in VR and in the real world. For example, physical movement in a virtual environment triggers activity in different areas of the brain from those active during real physical movement. It remains unclear whether this actually poses a problem. “Perhaps the brain processes the real world differently on a neurological level, even though the results on a cognitive, decision-making level are similar”, he says.

Voices, smells, crowds of people, fluctuations in temperature: all of these can also affect studies in ways that cannot always be clearly determined, says Grübel. “Data from the real world have great added value because of this, and they can show us where our models simplify things too much”. Future technologies might be able to reproduce some of these factors. Delft University of Technology in the Netherlands, for example, is working on a VR simulator that can replicate odours and temperature. But a completely believable virtual world could also trigger panic in test subjects, says Grübel. Just imagine experiencing a ‘virtual’ fire in the Sanaa Building with seemingly real smoke and heat. So virtual settings, too, can reach the limits of what is ethically justifiable.