DEBATE

Should publishers hand over scientific output to AI companies to train their models?

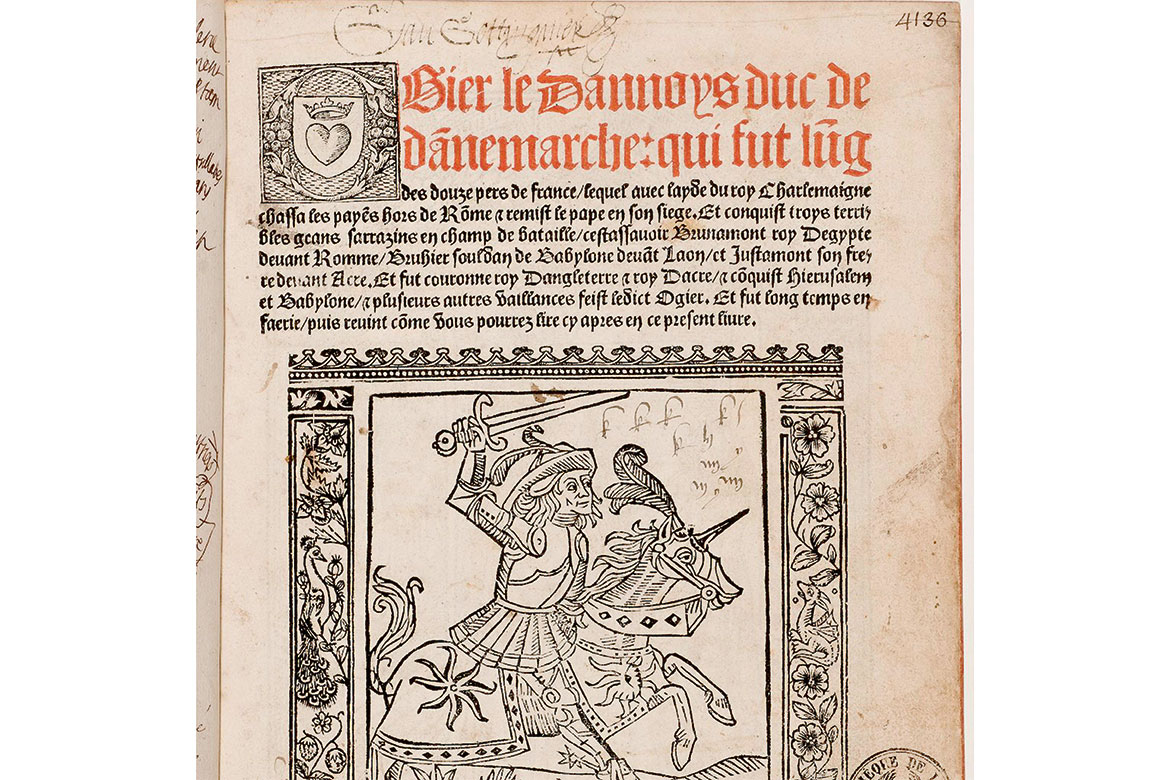

Image: Provided by subject

Publishers hold vast troves of scientific data, mostly taxpayer-funded, behind paywalls. This wealth of information represents countless hours of research and billions in public money. It’s a vital resource that should be openly accessible for both human analysis and AI processing, to accelerate scientific progress and innovation. The potential benefits of opening up this data are tremendous. AI systems can rapidly process and analyse large volumes of scientific literature, uncovering hidden connections across disciplines that human researchers might otherwise miss. This capability, combined with human expertise, could dramatically accelerate the pace of scientific discovery. Researchers would benefit from unrestricted access to the latest findings in their fields, fostering innovation and collaboration. Moreover, AI could help manage the overwhelming volume of published research, assisting scientists in staying up to date with developments in their areas of study. Given the pressing global challenges we face, we cannot afford to leave this wealth of knowledge untapped or restricted.

Although the concerns about legal issues and academic integrity are valid, they are not insurmountable. We can address these challenges through careful guidelines for AI use and proper attribution systems. The success of open-access models in computer science – where cutting-edge research is often freely available through preprint servers like arXiv – demonstrates that quality and innovation can thrive alongside openness.

In conclusion, yes, publishers should provide their scientific output to AI companies and researchers – free of charge. The enormous mass of existing data that publishers hold, largely funded by public resources, should be treated not as a commodity but as a vital resource for advancing human knowledge. By making their existing archives freely available for both human analysis and AI processing, publishers can unlock the full potential of our collective scientific efforts and accelerate innovation and discovery.

Imanol Schlag is a researcher from the ETH AI Center and co-lead of the Swiss AI Initiative’s Large Language Model project. Previously, he conducted research at Microsoft, Google and Meta.

In recent months, news spread that the publisher Taylor & Francis had struck a deal worth USD 10 million with Microsoft, granting it access to Routledge books for training large language models (LLMs). Other publishing houses have made similar deals. These practices, however, carry significant risks and side-effects that require more public attention and debate.

First, handing over scientific work to the largest AI companies will only increase their hold over the knowledge domain and concentrate power in the hands of a handful of corporations. These corporations are becoming the de facto informational infrastructure that decides which knowledge and data are deemed to be of worth – and which are not. Moreover, the building of this AI infrastructure is consuming planetary resources at an unsustainable pace. Providing research output to these corporations will only exacerbate these trends.

Secondly, science is increasingly investing in research ethics, for instance in the ethical collection, analysis, processing and storage of research data. This care and effort stand in stark contrast to the surreptitious training of LLMs on research output, for which hardly any ethical regulations currently exist. This creates serious problems: for example, study participants who never agreed to have their data used as training material have no idea that their data are flowing into LLMs.

Finally, repurposing scientific output as training data is a form of extraction of human labour, whereby publishers have found yet another way to monetise publicly funded research activity. Many academics already undertake considerable unpaid work for publishing houses in order to safeguard the quality of scientific papers. Those papers are then sold for profit, on top of already sitting behind a paywall or requiring considerable open access fees. If publishing houses are to continue these practices, scientists must at least be able to explicitly opt out.

Mathias Decuypere is a professor of school development and governance at the Zurich University of Teacher Education. His research focuses on how digital data and platforms are increasingly influencing education.
